Author: Lee Anderson

Date published: August 11, 2021

Date revised: September 14, 2021 to include Apple’s September 3 update.

The goal of the ILF is to identify child predators online. Related to that effort is empowering parents and guardians to educate their children and keep them safe online. We’ve provided some high-level information on how to leverage devices and their respective apps to create some guardrails for children who are still learning the ropes of the internet.

That guide mentioned that some of the security features built into Apple’s iOS limited the third-party monitoring options. In August 2021, Apple announced three new capabilities in an effort to protect children and combat child sexual abuse material (CSAM), commonly known as child pornography:

- Image communication safety in the Messages app for child accounts set up in Family Sharing

- iCloud Photos CSAM detection

- Updates to Siri and search regarding CSAM-related topics

Originally, these features were scheduled to arrive later this year in the US in updates to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey. However, as of September 3, Apple has announced that it is reevaluating its time frame and has given no definite release dates. While these new features seem to overlap, there are distinct differences between them. The following information should help clarify what is happening.

Image Communication Safety in Messages App

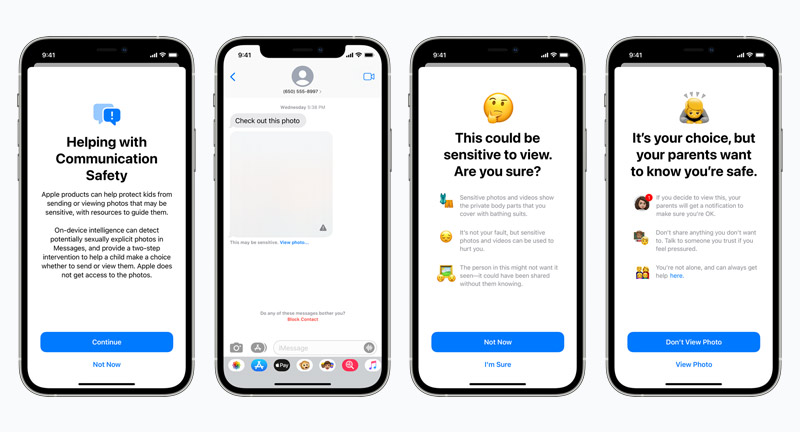

Apple’s Messages app has been designed for end-to-end encrypted communications. That means Apple cannot read messages sent between two parties. This is a security feature that ensures that messages are only seen by intended recipients. The image communication safety feature protects young children from immediately seeing explicit images by blurring the images and showing a warning (as shown in the accompanying image). This feature only works in the iOS Messages app for child accounts set up in Family Sharing.

Regarding the new communication safety feature, Apple states,

“The Messages app will add new tools to warn children and their parents when receiving or sending sexually explicit photos.

“When receiving this type of content, the photo will be blurred and the child will be warned, presented with helpful resources, and reassured it is okay if they do not want to view this photo. As an additional precaution, the child can also be told that, to make sure they are safe, their parents will get a message if they do view it. Similar protections are available if a child attempts to send sexually explicit photos. The child will be warned before the photo is sent, and the parents can receive a message if the child chooses to send it.”1

Image Source: Apple | https://www.apple.com/child-safety/

This activity all happens on the child’s phone, so these communications are protected by the privacy inherent to the Messages app and are not accessible by Apple or any other third party. If a child receives an explicit image and these controls are set up, the child can choose to unblur the image. If they are 12 years old or younger, unblurring the image will result in a notification being sent to the Family Sharing account holder (presumably their parents). Additionally, if that child chooses to send a sexually explicit image, before sending, the app will warn them that if they proceed, their parents will be notified. The blurring of images can still be configured for children 13-17, but notifications aren’t sent to a parent’s device if they choose to unblur them.
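The age rules above can be summarized in a short sketch. This is only a hypothetical model of the behavior described in this article, not Apple's code; the function name, parameters, and returned keys are invented for illustration.

```python
def communication_safety_actions(age: int, feature_enabled: bool, child_proceeds: bool) -> dict:
    """Hypothetical model of the rules described above (not Apple's implementation).

    age             -- age on the child account in Family Sharing
    feature_enabled -- whether the family has turned the feature on
    child_proceeds  -- whether the child chooses to view or send the explicit image
    """
    applies = feature_enabled and age <= 17
    return {
        # Explicit images are blurred and a warning is shown for any covered child account.
        "blur_and_warn": applies,
        # Parents are notified only for children 12 or younger who proceed anyway.
        "notify_parent": applies and age <= 12 and child_proceeds,
    }


print(communication_safety_actions(age=10, feature_enabled=True, child_proceeds=True))
print(communication_safety_actions(age=15, feature_enabled=True, child_proceeds=True))
```

In this model, a 15-year-old still gets the blur and warning, but no notification is sent to a parent.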

iCloud Photos CSAM Detection

The National Center for Missing and Exploited Children defines CSAM as “the sexual abuse and exploitation of children.”2 Apple states, “To help address this, new technology in iOS and iPadOS will allow Apple to detect known CSAM images stored in iCloud Photos. This will enable Apple to report these instances to the National Center for Missing and Exploited Children (NCMEC). NCMEC acts as a comprehensive reporting center for CSAM and works in collaboration with law enforcement agencies across the United States.”1

To understand how this works, we need to understand image hashing. A hash function converts a file into a fixed-length, effectively unique series of letters and numbers, and that output is called a “hash.” The concept of image hashing is “the process of using an algorithm to assign a unique hash value to an image. Duplicate copies of the image all have the exact same hash value. For this reason, it is sometimes referred to as a ‘digital fingerprint’.”3
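As a small illustration of the "digital fingerprint" idea, here is a sketch using SHA-256 from Python's standard library. SHA-256 is our choice for the example; the sources quoted here do not name a specific algorithm, and the byte strings stand in for real image files.

```python
import hashlib


def file_hash(data: bytes) -> str:
    """Return the SHA-256 hash of the data as a hexadecimal string."""
    return hashlib.sha256(data).hexdigest()


original = b"pretend these bytes are an image file"
exact_copy = bytes(original)      # a byte-for-byte duplicate
altered = original + b"!"         # the same file with one extra byte

print(len(file_hash(original)))                      # always 64 hex characters
print(file_hash(original) == file_hash(exact_copy))  # duplicates share a hash
print(file_hash(original) == file_hash(altered))     # any change gives a different hash
```

Note that a hash like this only matches exact copies. Changing a single byte produces a completely different result, which is why matching edited images requires a different kind of hash.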

The specific technology Apple is using is called NeuralHash, which “analyzes an image and converts it to a unique number specific to that image. The main purpose of the hash is to ensure that identical and visually similar images result in the same hash, while images that are different from one another result in different hashes. For example, an image that has been slightly cropped, resized or converted from color to black and white is treated identical to its original, and has the same hash.”4
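NeuralHash itself is not reproduced here. To show the general idea of a hash that survives small visual changes, the sketch below uses a much simpler, well-known technique called an average hash, applied to a tiny made-up grayscale grid. Everything in it (the function name, the 4x4 "image", the brightness change) is an assumption for illustration only.

```python
from typing import List


def average_hash(pixels: List[List[int]]) -> int:
    """Toy perceptual hash: one bit per pixel, set when the pixel is brighter than the mean.

    This is a simple stand-in technique, NOT Apple's NeuralHash.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


# A made-up 4x4 grayscale "image" (0 = black, 255 = white).
image = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 10, 200, 200],
    [200, 200, 10, 10],
]
brighter = [[min(255, p + 20) for p in row] for row in image]  # slightly edited copy
inverted = [[255 - p for p in row] for row in image]           # visually different image

print(average_hash(image) == average_hash(brighter))  # the edited copy keeps the same hash
print(average_hash(image) == average_hash(inverted))  # the different image does not
```

Because the hash depends on the overall pattern of light and dark rather than on exact byte values, a mildly edited copy still matches its original.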

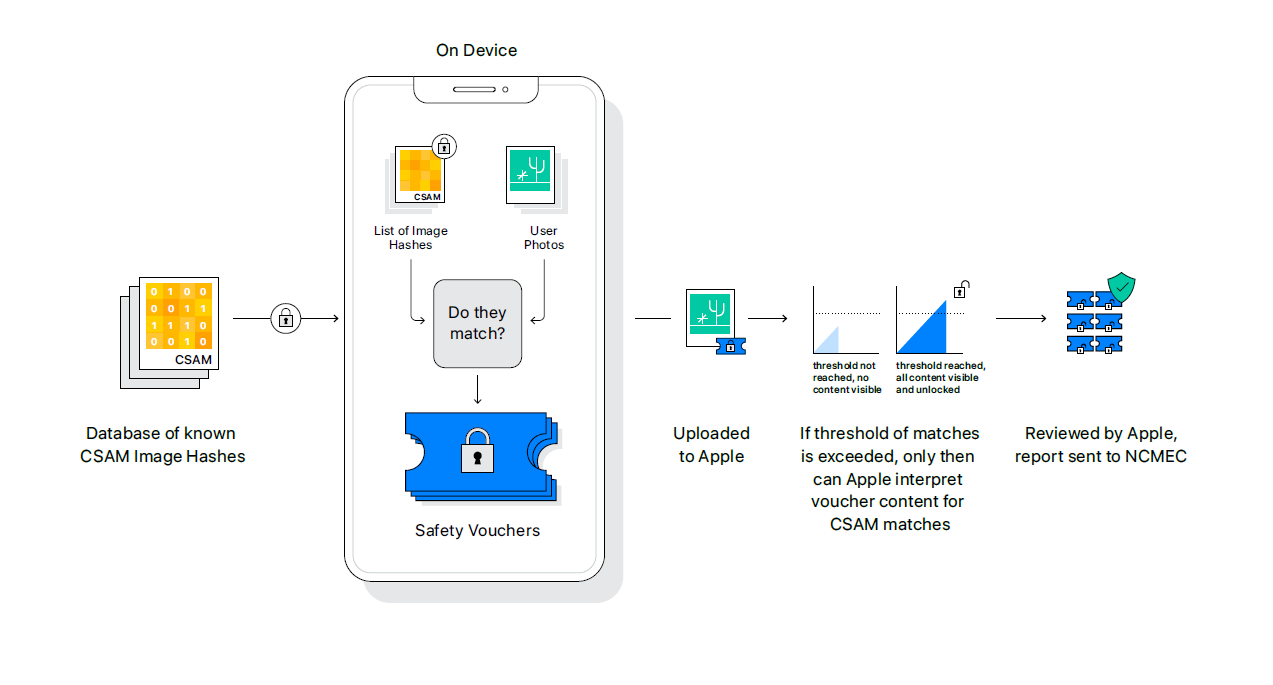

A database of hashes of known CSAM images is stored on Apple devices (not the images, just the strings of random-looking characters). Before any images are uploaded to iCloud, they are put through the hashing algorithm and compared against the database of known CSAM hashes.
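Conceptually, the comparison step is a lookup of each image's hash in a set of known hashes. The sketch below shows only that basic idea. The hash values and file names are made up, and the plain lookup is a simplification: Apple's technical summary describes a cryptographic matching protocol in which the device itself does not learn whether there was a match.

```python
# Hypothetical hash values standing in for the on-device database.
KNOWN_HASHES = frozenset({0x5A5A, 0x1234})


def is_known(image_hash: int, known_hashes: frozenset = KNOWN_HASHES) -> bool:
    """Return True when the image's hash appears in the database of known hashes."""
    return image_hash in known_hashes


def matches_before_upload(upload_queue: list) -> list:
    """Return the names of queued images whose hashes match the database."""
    return [name for name, image_hash in upload_queue if is_known(image_hash)]


# Each entry pairs a made-up file name with a made-up hash value.
upload_queue = [("beach.jpg", 0x0F0F), ("match.jpg", 0x5A5A)]
print(matches_before_upload(upload_queue))
```

Only hashes are compared, so the database never needs to contain the images themselves.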

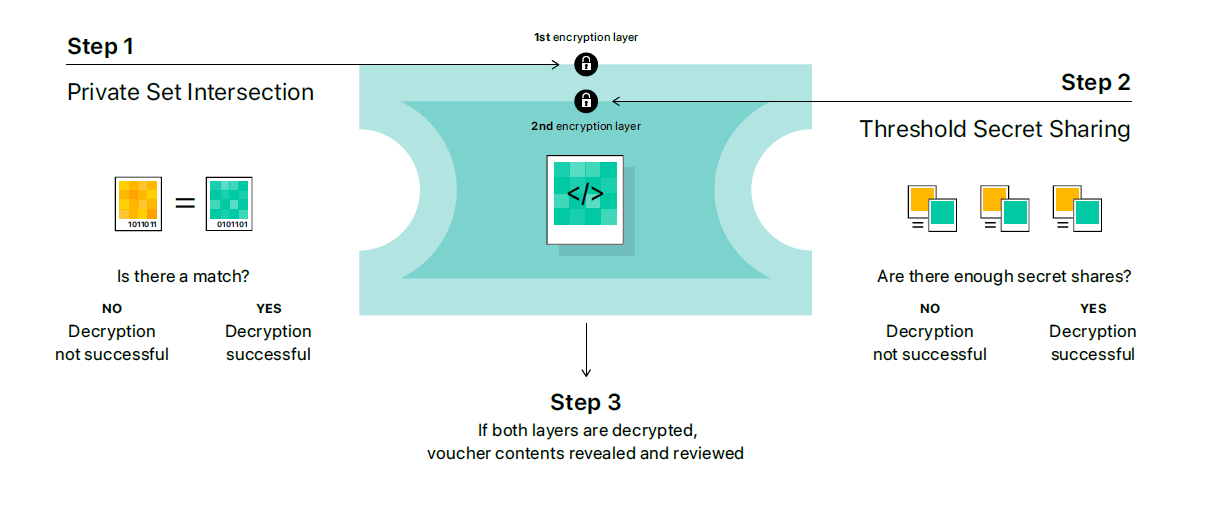

If there is a match, Apple says, “the device then creates a cryptographic safety voucher that encodes the match result. It also encrypts the image’s NeuralHash and a visual derivative. This voucher is uploaded to iCloud Photos along with the image. Using another technology called threshold secret sharing, the system ensures the contents of the safety vouchers cannot be interpreted by Apple unless the iCloud Photos account crosses a threshold of known CSAM content.”4
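To give a feel for threshold secret sharing, here is a minimal sketch of Shamir's secret sharing, the classic scheme of this kind. We are assuming it as a representative example; Apple's exact construction is described in its own technical documents and is not reproduced here. The secret, the threshold of 3, and the function names are illustrative.

```python
import secrets

PRIME = 2**127 - 1  # a large prime; all arithmetic is done modulo this number


def make_shares(secret: int, threshold: int, count: int) -> list:
    """Split `secret` into `count` shares so that any `threshold` of them recover it."""
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        y = 0
        for c in reversed(coeffs):  # evaluate the polynomial with Horner's rule
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: list) -> int:
    """Recover the constant term by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


key = 123456789  # stands in for a decryption key
shares = make_shares(key, threshold=3, count=5)
print(recover_secret(shares[:3]) == key)  # three shares are enough
print(recover_secret(shares[:2]) == key)  # two shares almost certainly are not
```

The analogy to the article: each matching image contributes one share, and the contents of the vouchers only become readable once enough shares exist.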

Image Source: Apple | https://www.apple.com/child-safety/pdf/Expanded_Protections_for_Children_Technology_Summary.pdf

Once a threshold number of safety vouchers is met (a certain number of known CSAM images uploaded to iCloud), Apple says it will manually review the matches, disable the user’s account, and send a report to the National Center for Missing and Exploited Children (NCMEC). If a user feels their account has been mistakenly flagged, they can file an appeal to have their account reinstated. There is a very small chance of false positives, but Apple asserts, “The system is very accurate, with an extremely low error rate of less than one in one trillion per account per year.”4 We do not know the exact number for the image threshold yet, and we assume the threshold is in place to reduce the number of false positive reports sent to Apple.
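To see why a threshold would reduce false positives, consider a rough calculation. The numbers below (a one-in-a-million false match rate per photo, 10,000 photos, thresholds of 1 and 5) are made up for illustration and are not Apple's figures. The sketch also assumes each photo is an independent chance of a false match.

```python
def prob_at_least(k: int, n: int, p: float) -> float:
    """Probability that at least k of n independent photos falsely match (binomial tail)."""
    if k <= 0:
        return 1.0
    term = (1 - p) ** n  # probability of exactly 0 false matches
    tail = 0.0
    for i in range(1, n + 1):
        # Probability of exactly i matches, computed from the probability of i-1 matches.
        term *= (n - i + 1) / i * p / (1 - p)
        if i >= k:
            tail += term
    return tail


photos = 10_000          # hypothetical library size
false_match_rate = 1e-6  # hypothetical chance that one innocent photo matches

print(prob_at_least(1, photos, false_match_rate))  # flagging on a single match
print(prob_at_least(5, photos, false_match_rate))  # flagging only after five matches
```

With these made-up numbers, requiring five matches instead of one makes an accidental flag many orders of magnitude less likely.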

Image Source: Apple | https://www.apple.com/child-safety/pdf/Expanded_Protections_for_Children_Technology_Summary.pdf

Updates to Siri and Search

Apple is updating Siri and Search by “providing additional resources to help children and parents stay safe online and get help with unsafe situations. For example, users who ask Siri how they can report CSAM or child exploitation will be pointed to resources for where and how to file a report.”1

Siri and Search will also be updated to intervene when they detect users trying to search for CSAM-related topics. These interventions will explain that the search they are attempting is harmful and problematic, and will additionally provide resources from Apple partners to get help with the issue.

Summary

Apple is taking some important steps in protecting children from being abused. These measures are not all implemented by default. If these new features would benefit your family, please look carefully at how to properly set them up for your children’s devices. While these technical measures do a lot of good, they should only be supplemental to having open and honest communication with your children.

Note: Content is subject to change as we follow Apple’s announcements about these features.

1 Apple. “Child Safety.” Apple, 5 Aug. 2021, www.apple.com/child-safety.

2 “Child Sexual Abuse Material.” National Center for Missing & Exploited Children, www.missingkids.org/theissues/csam. Accessed 11 Aug. 2021.

3 “INHOPE | What Is Image Hashing?” INHOPE, inhope.org/EN/articles/what-is-image-hashing. Accessed 11 Aug. 2021.

4 “Expanded Protections for Children: Technology Summary.” Apple, 2021. https://www.apple.com/child-safety/pdf/Expanded_Protections_for_Children_Technology_Summary.pdf.